The strong converse for a coding theorem shows that the optimal asymptotic rate possible with vanishing error cannot be improved by allowing a fixed error. Building on a method introduced by Gu and Effros for centralized coding problems, we develop a general and simple recipe for proving strong converses that applies to distributed problems as well.

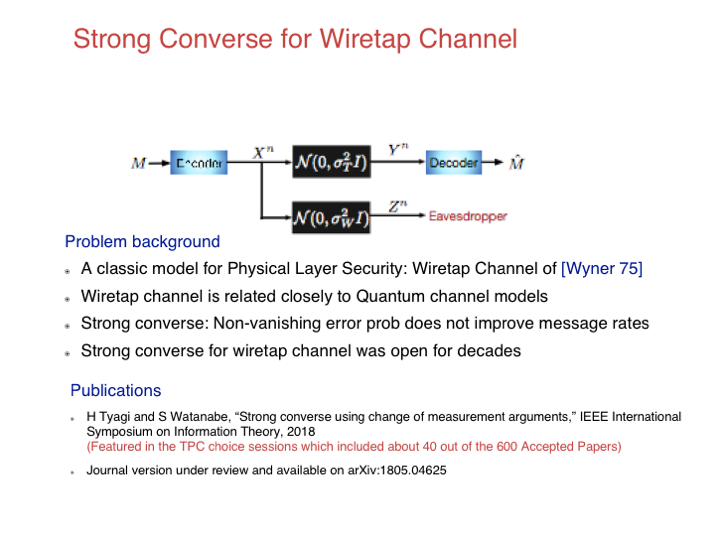

Heuristically, our proof of the strong converse mimics the standard steps for proving a weak converse, except that we apply those steps to a modified distribution obtained by conditioning the original distribution on the event that no error occurs. A key component of our recipe is the replacement of the hard Markov constraints implied by the distributed nature of the problem with a soft information cost, using a variational formula introduced by Oohama. We illustrate our method by providing a short proof of the strong converse for the Wyner-Ziv problem and new strong converse theorems for interactive function computation, common randomness and secret key agreement, and the wiretap channel.
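
The conditioning step can be sketched as follows. The notation here is assumed for illustration and is not taken from the text above: $P_{X^nY^n}$ is the original source distribution, $\varepsilon$ is the fixed error probability, and $\mathcal{C}$ is the set of realizations $(x^n, y^n)$ on which a given deterministic code makes no error, so that $P_{X^nY^n}(\mathcal{C}) \ge 1 - \varepsilon$.

$$
P_{\tilde{X}^n \tilde{Y}^n}(x^n, y^n) = \frac{P_{X^nY^n}(x^n, y^n)\, \mathbb{1}\{(x^n, y^n) \in \mathcal{C}\}}{P_{X^nY^n}(\mathcal{C})},
$$

$$
D\big(P_{\tilde{X}^n \tilde{Y}^n} \,\big\|\, P_{X^nY^n}\big) = \log \frac{1}{P_{X^nY^n}(\mathcal{C})} \le \log \frac{1}{1 - \varepsilon}.
$$

Under the modified distribution the code makes no error, so weak-converse style steps can be applied to it directly. The divergence bound does not grow with $n$, which suggests that the cost of changing the measure, normalized by $n$, vanishes for any fixed $\varepsilon < 1$.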